SSP Forum: Jack Beasley and Kevin Tan (M.S. Candidates)

The

Symbolic Systems Forum

presents

Explanatory Power in Inference: Is it Useful?

Jack Beasley (M.S. Candidate)

Symbolic Systems Program

and

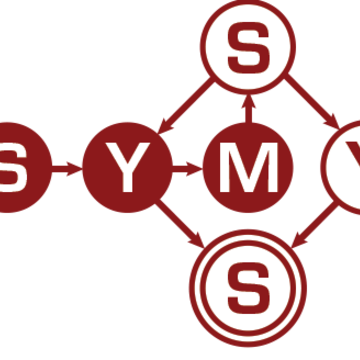

Object-Centric Visual Representation Learning

Kevin Tan (M.S. Candidate)

Symbolic Systems Program

Monday, April 19, 2021

2:30 pm - 3:30 pm

Join from PC, Mac, Linux, iOS or Android (requires logging in to a Zoom account): https://stanford.zoom.us/j/99713091022?pwd=b0hna3VRUkFWWHl0TUEwcTRQYktwQT09

ABSTRACTS:

(1) Jack Beasley, "Explanatory Power in Inference: Is it Useful?" (Primary Advisor: Thomas Icard, Philosophy)

Explanatory reasoning, or the sort of reasoning invoked when determining how well a given hypothesis explains the observed phenomena, has long been a topic of interest in epistemology and philosophy of science. However, efforts to precisely specify what it means to be "explanatory" have not reached consensus, and hard-and-fast rules for determining the explanatory power of a hypothesis have generally proved elusive. Recently, there has been significant interest in specifying explanatory power in terms of concrete probabilistic definitions. Such definitions allow for an extension of Bayesian inference in which beliefs are updated not only on the basis of a prior and likelihood, but also on the basis of the explanatory power of the hypotheses over the observed data. In this talk, I'll present some of these measures of explanatory power, assess their efficacy through simulations of inference, and discuss what role explanatory power might play in a world of rapid advances in statistical inference techniques.
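As a rough illustration of the kind of extension described above, the following Python sketch compares a standard Bayesian update with an update that also weights each hypothesis by a measure of explanatory power. The abstract does not say which measures or update rules the talk uses, so everything here is an assumption: the log-ratio measure log(P(e|h) / P(e)), the `explanationist_update` rule, its `weight` parameter, and the toy numbers are all hypothetical choices made for illustration only.

```python
import math


def bayes_update(priors, likelihoods):
    """Standard Bayesian update over a finite set of hypotheses."""
    unnormalized = [p * l for p, l in zip(priors, likelihoods)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def explanatory_power(priors, likelihoods):
    """Illustrative measure (an assumption): log(P(e|h) / P(e)).

    Positive when a hypothesis makes the evidence more expected than it
    was on average, negative when it makes the evidence less expected.
    Requires every likelihood to be strictly positive.
    """
    marginal = sum(p * l for p, l in zip(priors, likelihoods))
    return [math.log(l / marginal) for l in likelihoods]


def explanationist_update(priors, likelihoods, weight=1.0):
    """Hypothetical rule: scale each Bayesian term by exp(weight * power).

    With weight = 0 this reduces exactly to bayes_update.
    """
    powers = explanatory_power(priors, likelihoods)
    unnormalized = [
        p * l * math.exp(weight * s)
        for p, l, s in zip(priors, likelihoods, powers)
    ]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Toy example: two hypotheses with equal priors, where the first
# makes the observed evidence much more likely than the second.
priors = [0.5, 0.5]
likelihoods = [0.8, 0.2]

print(bayes_update(priors, likelihoods))
print(explanationist_update(priors, likelihoods, weight=1.0))
```

In this toy setup the explanationist rule shifts more belief toward the better-explaining hypothesis than the plain Bayesian update does; whether such a shift actually helps inference is the question the talk poses.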

(2) Kevin Tan, "Object-Centric Visual Representation Learning" (Primary Advisor: Jiajun Wu, Computer Science Department)

There is much evidence in developmental psychology suggesting that objects, and the interactions between them, are the foundational building blocks of how we perceive the visual world. Object-centric representations are key in supporting high-level cognitive abilities such as causal reasoning, problem solving, language grounding, and physical understanding. However, many current machine learning methods are not constructed in a way that represents objects explicitly, which poses a threat to model interpretability and modularity across a variety of visual tasks. Motivated by these observations, we propose Scene Style Networks (SSN), which explicitly learn object-centric representations from pairs of scene graphs and images. Furthermore, we introduce the task of controllable image synthesis, which requires control over both the layout and the texture of a synthesized image. We find that (1) explicitly separating the layout and texture generation pathways enables improved image synthesis results, and (2) our joint layout-texture latent space exhibits higher disentanglement than that of prior work. Based on these findings, we argue that building explicit object-centric representations into computer vision models is an essential step towards a holistic understanding of the visual world.